Aims and Scope

Research on Intelligent Manufacturing and Assembly (RIMA) (eISSN: 2972-3329) is an international, peer-reviewed, open access journal dedicated to the latest advancements in intelligent manufacturing and assembly. RIMA serves as a critical bridge between cutting-edge research and practical applications, fostering collaboration between the academic community and industry practitioners. The journal publishes high-impact research that pushes the boundaries of knowledge in the design, analysis, manufacturing, and operation of intelligent systems and equipment. RIMA focuses on innovative technologies and methodologies that are transforming the manufacturing landscape, driving efficiency, precision, and sustainability in industrial processes. By publishing rigorous research and fostering a vibrant community of scholars and practitioners, RIMA aims to be the go-to resource for advancing the state of the art in intelligent manufacturing and assembly.

Topics of interest include, but are not limited to, the following:

• Digital design and manufacturing

• Theories, methods, and systems for intelligent design

• Advanced processing techniques

• Modelling, control, optimization, and scheduling of systems

• Manufacturing system simulation and digital twin technology

• Industrial control systems and the industrial Internet of Things (IIoT)

• Safety and reliability assessment

• Robotics and automation

• Artificial intelligence and machine learning in manufacturing

• Supply chain optimization and management

• Additive manufacturing and materials science

• Cybersecurity and data privacy in manufacturing

• Sustainability and circular economy in manufacturing

• Bio-fabrication and other advanced manufacturing methods

• Digital workforce and automation


Current Issue

Research Article

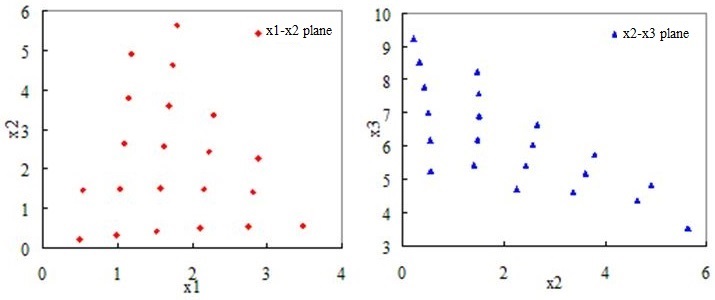

This paper presents the application of the probabilistic multi-objective optimization (PMOO) method to enterprise production management, where a "high long-term profit target" and a "small investment amount" must be optimized simultaneously. PMOO is an effective approach to multi-objective optimization problems that draws on system theory and probability theory; it introduces the new concept of "preferable probability" as the basis of the methodology. In PMOO, the evaluated attributes (objectives) of the candidates are first divided into two basic types, beneficial and unbeneficial, and a quantitative method for evaluating the partial preferable probability of each type of attribute is established. The total preferable probability of each candidate alternative is the product of the partial preferable probabilities of all its attributes, and the candidate with the maximum total preferable probability represents the overall optimum of the system. For the enterprise production management problem involving three kinds of products, the objectives are to maximize the long-term profit target and to minimize the investment amount; Hua's "good lattice point" discretization and uniform mixture design are applied to simplify the optimization process and data processing. Finally, a rational result is obtained.
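The abstract states the PMOO scoring rule (partial preferable probabilities per attribute, multiplied into a total) but not the normalization. The sketch below assumes the linear normalization used in other PMOO publications: a beneficial value is divided by its column sum, and an unbeneficial value X is first reflected to X_max + X_min − X and then divided by the sum of the reflected column, so each attribute's partial probabilities sum to 1. The function name `pmoo_scores` and the candidate table are hypothetical illustrations, not data or code from the paper.

```python
from math import prod


def pmoo_scores(table, beneficial):
    """Total preferable probability of each candidate (row) in `table`.

    table: one row per candidate, each row holding its attribute values.
    beneficial: one bool per attribute, True if larger values are better.
    Normalization is an assumption (linear form), not taken from the abstract.
    """
    n_rows, n_attr = len(table), len(beneficial)
    partial = [[0.0] * n_attr for _ in range(n_rows)]
    for j, is_beneficial in enumerate(beneficial):
        column = [row[j] for row in table]
        if is_beneficial:
            # Beneficial: partial probability proportional to the value itself.
            total = sum(column)
            for i, x in enumerate(column):
                partial[i][j] = x / total
        else:
            # Unbeneficial: reflect so the smallest value scores highest.
            hi, lo = max(column), min(column)
            reflected = [hi + lo - x for x in column]
            total = sum(reflected)
            for i, r in enumerate(reflected):
                partial[i][j] = r / total
    # Total preferable probability = product of the partial probabilities.
    return [prod(row) for row in partial]


# Hypothetical candidates: (long-term profit, investment amount).
candidates = [(120.0, 50.0), (150.0, 80.0), (100.0, 30.0)]
scores = pmoo_scores(candidates, beneficial=[True, False])
best = max(range(len(scores)), key=scores.__getitem__)
print(best, scores)
```

For this made-up table the third candidate wins: its profit is lowest, but its much smaller investment gives it the largest product of partial probabilities. In the paper, the candidates would instead come from the good-lattice-point and uniform-mixture-design discretization.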

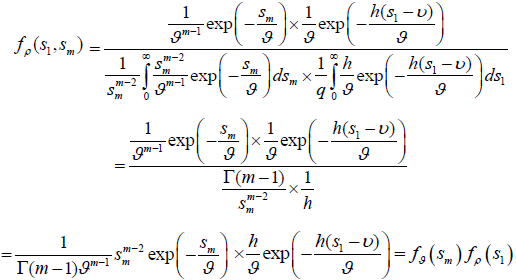

The technique used here emphasizes pivotal quantities and ancillary statistics for reaching statistical predictive or confidence decisions about anticipated outcomes of applied stochastic models under parametric uncertainty. It is applicable whenever the statistical problem is invariant under a group of transformations that acts transitively on the parameter space. It does not require the construction of any tables and is applicable whether the experimental data are complete or Type II censored. The proposed technique is based on a probability transformation and pivotal quantity averaging, and it can be used to solve real-life problems in many areas, including engineering, science, industry, automation and robotics, business and finance, medicine, and biomedicine. It is conceptually simple and easy to use.
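The abstract does not spell out the authors' procedure, so the sketch below illustrates only the underlying idea with a textbook pivotal quantity rather than the paper's pivotal quantity averaging. It assumes exponential lifetimes with mean θ, observed under Type II censoring (the test stops at the r-th of n failures). The total time on test T then satisfies T/θ ~ Gamma(r, 1) whatever θ is, and the scale group acts transitively on θ > 0, matching the invariance condition above. Inverting the pivot gives a confidence interval without any printed tables. The function names and sample numbers are hypothetical.

```python
import math


def erlang_cdf(x, r):
    """P(G <= x) for G ~ Gamma(shape=r, scale=1) with integer r (Erlang)."""
    if x <= 0:
        return 0.0
    term, s = 1.0, 1.0
    for k in range(1, r):
        term *= x / k
        s += term
    return 1.0 - math.exp(-x) * s


def erlang_quantile(p, r, tol=1e-12):
    """Invert erlang_cdf by bisection (avoids any lookup tables)."""
    lo, hi = 0.0, 1.0
    while erlang_cdf(hi, r) < p:
        hi *= 2.0
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if erlang_cdf(mid, r) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def exp_mean_ci(failures, n, level=0.95):
    """Confidence interval for the exponential mean theta from the first r of n
    failure times (Type II censoring; assumed exponential model)."""
    x = sorted(failures)
    r = len(x)
    # Total time on test: observed failures plus (n - r) units censored at x_(r).
    t = sum(x) + (n - r) * x[-1]
    # Pivot: T / theta ~ Gamma(r, 1), free of the unknown theta.
    a = (1.0 - level) / 2.0
    return t / erlang_quantile(1.0 - a, r), t / erlang_quantile(a, r)


# Hypothetical test: 5 units on test, stopped at the 3rd failure.
lo_ci, hi_ci = exp_mean_ci([1.0, 2.0, 3.0], n=5)
print(lo_ci, hi_ci)
```

The same pivot also covers complete data (r = n), which echoes the abstract's point that the approach works whether the data are complete or Type II censored.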

| Field | Details |
|---|---|
| eISSN | 2972-3329 |
| Abbreviation | Res Intell Manuf Assem |
| Editor-in-Chief | Prof. Matthew Chin Heng Chua (Singapore) |
| Publishing Frequency | Continuous publication |
| Article Processing Charges (APC) | 0 |
| Publishing Model | Open Access |

Maosheng Zheng, Jie Yu