Holding teachers accountable: An old-fashioned, dry, and boring perspective

Abstract

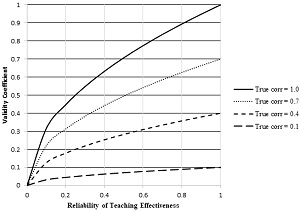

Few would disagree that it is desirable to hold teachers accountable, but student evaluations of teaching and department head evaluations of teaching fail to do so validly. Although this failure may be due, in part, to difficulties in conceptualizing teaching effectiveness and student learning, it is also due to insufficient attention to measurement reliability. Measurement reliability sets an upper bound on measurement validity, which guarantees that unreliable measures of teaching effectiveness are also invalid. In turn, for a measure of teaching effectiveness to be reliable, its items must correlate well with one another, there must be many items, or both. Unfortunately, at most universities, those tasked with teaching assessment do not understand the basics of psychometrics, which renders their assessments of teachers invalid. To help remedy unsatisfactory assessment procedures, the present article addresses the relationship between reliability and validity, some requirements of reliable and valid measures, and the psychometric implications for current teaching assessment practices.
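
The two quantitative claims in the abstract can be made concrete with a short sketch. Under classical test theory, and assuming measurement errors are uncorrelated, the observed correlation between a measure X and a criterion Y cannot exceed √(r_xx · r_yy), where r_xx and r_yy are their reliabilities; with a perfectly reliable criterion the ceiling is √r_xx. The dependence of reliability on inter-item correlation and on the number of items is captured by standardized coefficient alpha (the Spearman-Brown form), α = k·r̄ / (1 + (k − 1)·r̄), for k items with mean inter-item correlation r̄. The Python below is an illustrative sketch, not the article's own procedure; the function names and the value r̄ = 0.3 are hypothetical choices made for illustration.

```python
import math


def validity_ceiling(rel_x: float, rel_y: float = 1.0) -> float:
    """Upper bound on the correlation between measures X and Y.

    Classical test theory (uncorrelated errors assumed): |r_xy| <= sqrt(r_xx * r_yy).
    With a perfectly reliable criterion (rel_y = 1.0) the bound is sqrt(r_xx).
    """
    for rel in (rel_x, rel_y):
        if not 0.0 <= rel <= 1.0:
            raise ValueError("reliabilities must lie in [0, 1]")
    return math.sqrt(rel_x * rel_y)


def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized coefficient alpha (Spearman-Brown form).

    k      -- number of items in the measure
    mean_r -- mean inter-item correlation, assumed here to lie in [0, 1]
    """
    if k < 1:
        raise ValueError("need at least one item")
    if not 0.0 <= mean_r <= 1.0:
        raise ValueError("mean_r is assumed to lie in [0, 1]")
    return k * mean_r / (1 + (k - 1) * mean_r)


if __name__ == "__main__":
    # Hypothetical illustration: hold the mean inter-item correlation at 0.3
    # and vary the number of items.
    for k in (3, 5, 10, 20):
        alpha = standardized_alpha(k, 0.3)
        ceiling = validity_ceiling(alpha)
        print(f"k={k:2d}  alpha={alpha:.3f}  validity ceiling={ceiling:.3f}")
```

With r̄ held at 0.3, three items give α ≈ 0.56 (validity ceiling 0.75), whereas twenty items give α ≈ 0.90 (ceiling ≈ 0.95). Holding k fixed and raising r̄ has the same effect, which mirrors the requirement that items correlate well, that there be many of them, or both.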

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.